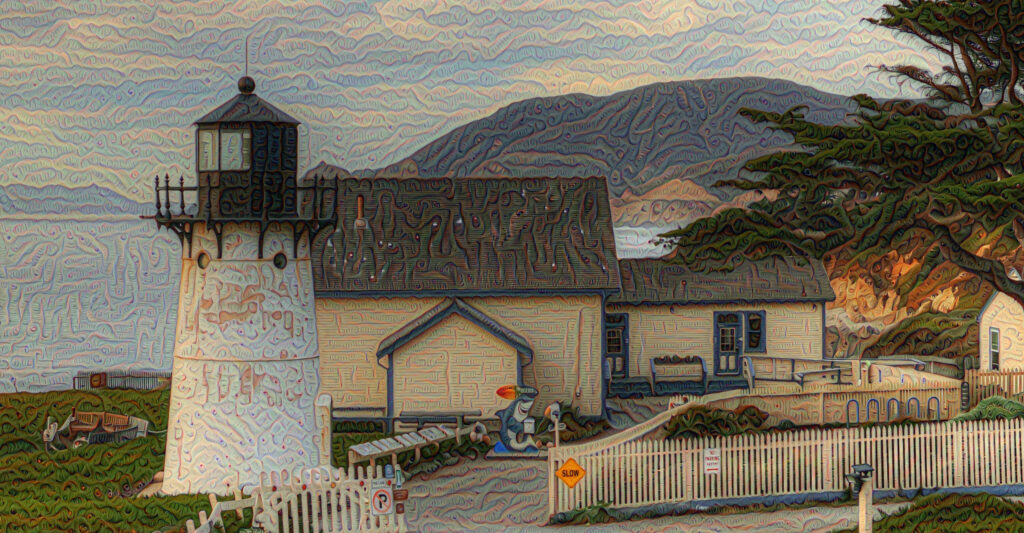

Approaching Daniel Ambrosi’s enormous “Dreamscape” of Point Montara Lighthouse is a disorienting experience that hits you in a series of little shocks. The size alone—sixteen feet wide by eight feet high—stuns. The tumultuous sky of tumbling clouds, shimmering ocean and vivid setting sun feel almost too real. Beyond the glowing clusters of coastal succulents, the path to the lighthouse beckons, and the closer you get to the backlit aluminum frame covered with a seamless fabric print, the more the image knits together and falls apart. Little swirls of unexpected purples and blues, the impressionistic whorls that make up the landscape, come into full focus and profoundly alter how you experience the work. Suddenly, you’re asking yourself, “What am I looking at?”

That’s no accident. In fact, that wavering sense of reality shifting is exactly where the Half Moon Bay artist wants you to be.

“I’m an avid hiker, skier, traveller and a lover of special places,” says Daniel, standing in the corridor of Princeton-by-the-Sea’s Oceano Hotel where Point Montara Lighthouse is currently displayed. “In certain places, the scene before you just knocks you out, takes your breath away, and my attempts to capture that and convey that experience through traditional photography never fully worked. I’m a very analytical guy, and I was always asking myself, ‘What am I missing?’”

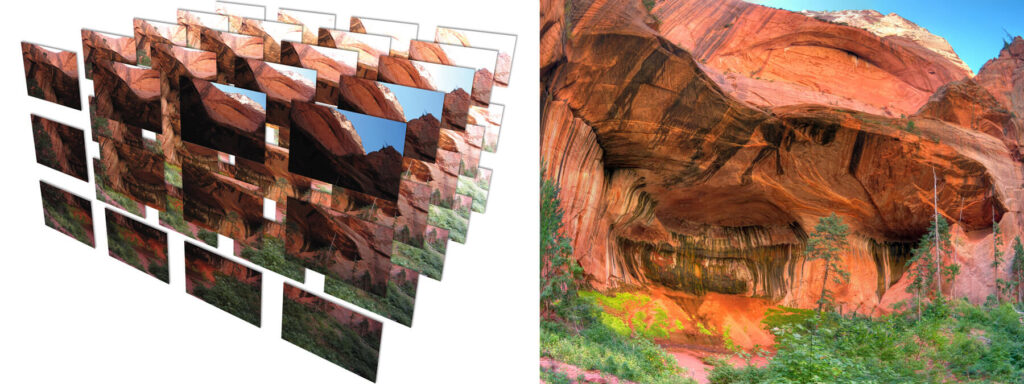

For Daniel, answering that question launched an exhaustive quest, leading him to become a founding creator in the emerging artificial intelligence (AI) art movement. With architecture and 3D computer graphics degrees from Cornell University, he helped pioneer the use of 3D graphics in the architecture industry in Seattle before turning his attention to Silicon Valley. During breaks from his demanding career working in visual and marketing communications, Daniel continued his efforts to capture awe-inspiring views through a camera lens. But it wasn’t until 2011 that he had an epiphany in Zion National Park. He realized that taking single-shot images would never convey the expansive, immersive experience he wanted to share. “We see with a much greater field of view and a more dynamic range of light and shadow than a camera can,” he explains. “The healthy eye sees with an incredible level of detail.”

In a flash of insight, Daniel realized that he could get closer to his vision by combining many pictures into a single scene. He devised a method, which he calls XYZ photography, that involves taking multiple images: horizontally (X), vertically (Y) and with multiple exposures from dark to light (Z). He then compresses those pictures into one using three different software packages. Daniel felt he’d finally cracked an aspect of the problem he’d been struggling with for years.

Yet, he still wasn’t satisfied. Although his XYZ method provided the visual and visceral moment of breathlessness he sought, he wanted to challenge the viewer to ask deeper questions about the nature of reality itself. “When I see a special place, I feel it in my chest,” he says, leaning forward to express the thought. “When that happens, I wax philosophical: ‘What am I seeing? How would a butterfly see this? What’s real anyway?’ Physics tells us that nothing is solid and seeing is a very subjective thing.” It would take a leap into the world of AI technology to unlock Daniel’s vision and allow him to create the ultimate immersive landscapes that had eluded him.

DeepDream—a computer vision program developed by Google engineers to explore how AI thinks—emerged as the missing piece in Daniel’s puzzle. “Initially, it was a viral phenomenon folks used to turn their family photos into psychedelic nightmares,” he says with a laugh, “but I saw it as an opportunity to take my photography to a place that would evoke a much stronger emotional response and really make you question what it was you were seeing.”

However, DeepDream wasn’t equipped to manage the immense size of Daniel’s XYZ images. He reached out to top Silicon Valley engineers, and Google’s Joseph Smarr and NVIDIA’s Chris Lamb successfully expanded DeepDream’s technological capabilities, super-scaling the software to suit Daniel’s purposes. Using a proprietary version of DeepDream, Daniel finally fulfilled his quest, calling his new works Dreamscapes: A Collaboration of Nature, Man, and Machine.

In each work, from the twilight spectacle of Central Park Nightfall, which seems to look back at you from eye-like whorls tucked into tree branches and shining out of the pond, to the sweeping expanse of Fitzgerald Marine Reserve, which upon closer inspection is shaped by geometric patterns etched in sand and sea, Daniel partners with DeepDream. He directs it to access one or several of its many layers to ‘dream’ the image in the direction he wants to go, whether that’s impressionistic, animalistic, or something more surreal. “It’s like collaborating with a partner, because even though I know it’s not sentient, it’s constantly surprising me,” he says. “I can control the direction, but I can’t control the details.”

Given how heavily Daniel processes his images, it’s tempting to think of him as a technical photographer, but he feels more aligned with a different creative discipline. “Unintentionally, the arc of my development of this art paralleled the arc of landscape painting,” he says. “It started with representational landscapes like the Hudson River School painters in the 1800s, and with AI, it started to morph into this new impressionism.” In the manner of Monet’s enormous water lilies or Seurat’s pointillist pastorals, Daniel’s creations require engagement. “Interaction is vital,” he notes. “If you don’t have the curiosity to get close, you’ll miss the entire thing.”

Daniel’s AI-augmented artworks and grand-format landscape images have been exhibited at international conferences, art fairs and gallery shows with public installations ranging from major tech offices (including Google-SF and Google-NYC) to hotels and medical centers. Private collectors are also discovering Dreamscapes (scalable from 40 x 40 inches to 8 x 16 feet) and Daniel accepts commissioned work as well.

Finally creating the kinds of images he’s always longed to share, Daniel continues to explore what’s possible. While sheltering in place over the summer, he began experimenting with cubism, in the tradition of Cezanne and Picasso. He has also developed an interest in ‘crypto art,’ where artists can sell single- and multiple-edition digital art works.

As Daniel expands his artistic possibilities and establishes his place in the art world, he has come to a place of gratitude for what he’s been able to accomplish. “The whole motivation was to capture and convey the experience I was having,” he reflects, “and when I see that transfer happen, that’s everything. That’s why I started down this path. It took decades, but it’s satisfying to get to the point where it works.”

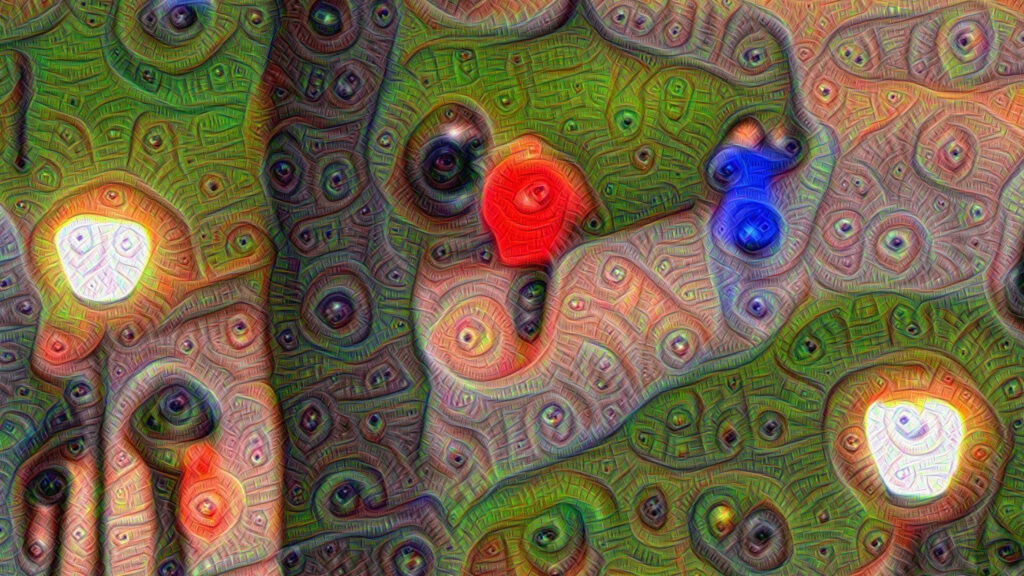

Central Park Nightfall

Full Scene: This is the “dreamed” version of the entire panorama originally captured; the detailed hallucinations are barely visible at this scale.

Close-Up: At this zoom level, the first pass of dreaming is clearly visible; this shows one style of hallucination.

Extreme Close-Up: Even closer up, the second pass of dreaming in an entirely different style is revealed.